Common Issues & Unique Solutions for Oracle Apps Products

Tuesday, February 22, 2022

Oracle database performance notes

Master Note: Database Performance Overview (Doc ID 402983.1)

SQL Tuning Health-Check Script (SQLHC) (Doc ID 1366133.1)

Achieving Optimal Performance with Oracle E-Business Suite (Doc ID 2125596.1)

Oracle E-Business Suite Performance Guide (Doc ID 1672174.1)

E-Business Applications Performance Advanced Skills Cookbook (Doc ID 1562454.1)

TFA Collector - TFA with Database Support Tools Bundle (Doc ID 1513912.1)

Wednesday, April 11, 2018

Friday, March 23, 2018

Blocking session in database oracle EBS R12.1

Today we had session locking issues reported in EBS PROD via OEM incident manager.

Total db time 1,039 seconds is consumed by 1 sessions blocked by session SID,SERIAL:916,57869

SID SERIAL# SQL_ID MODULE EVENT

Total db time 1,039 seconds is consumed by 1 sessions blocked by session SID,SERIAL:916,57869

After monitoring , we identified that SID 916 was holding the SID 1544.

INST_ID SESS

---------- ----------------

1 Holder: 916

1 Waiter: 1544

The session from SID 916 was workflow notification session and it was holding another workflow notification session.

------- ---------- ------------ ---------------------------------------- ----------------

916 57869 976jzgdb06abj e:ICX:bes:oracle.apps.wf.notification.se latch: shared pool

1544 23035 3c4awu4jgqy2u e:ICX:bes:oracle.apps.wf.notification.se enq: TX - row lock contention

Found the sql that was associated with sql_id : 976jzgdb06abj and then found out the bind variable values for that sql statement. Actually the sessions were going in a infinite loop and it had done 786703676 executions since 08/04/2017 Fri 12:09:05.

I took development team's help to find out if there are some workflow approval requisitions that were stuck based on below sql statement. Then we found an issue with HR record table where our Company CEO ( XX ) has a supervisor as YY and strangely YY has supervisor as XX in system. So the WF session was unable to find out who was the supervisor to assign the requisition and it was going in continuous loop.

Development team removed the super visor details of CEO ( XX) in EBS system and the loop was closed. The session were cleared in PROD.

SQL statement:

SELECT SUPERVISOR_ID FROM PER_ALL_ASSIGNMENTS_F WHERE PER_ALL_ASSIGNMENTS_F.PERSON_

Monday, February 19, 2018

Gather stats slowness on partitioned table

In our environment, We have a common Oracle Home 12.1.0.2 for our Unit & QA database hosted on same database server where we are gathering stats on a partitioned (156 partitions) table manually using below command. The table has incremental stats enabled in both Unit & QA environment and there is an partitioned index PK_1894 (156 partition) on the table. The testing team is loading the data in exact same way in both the environments and we confirmed that they are not loading or updating data in older partitions.

dbms_stats.set_table_prefs('OIDFATM','FCT_COMMON_POLICY_SUMMARY','INCREMENTAL','TRUE');

dbms_stats.gather_table_stats(ownname=>'OIDFATM',tabname=>'FCT_COMMON_POLICY_SUMMARY',cascade=>TRUE,degree => DBMS_STATS.DEFAULT_DEGREE);

In unit environment the gather stats job is taking 35 mins and in QA env it is completing within 2 mins. Upon further analysis we found that in Unit the gather stats job is gathering stats for all 156 partitions both on table & index causing longer run time. In QA env the gather stats job is just gathering stats only for 4-5 partitioning due to which it is completing within 2 min.

I wanted to check why does optimizer think that the stats are stale for all partitions for UNIT database.

Further investigation revealed that Histogram creation on few columns are being generating as developement team has been running some select aggregasion query that was accessing multiple partitions in UNIT region. As histograms are gathered , hence gather stats program was gathering stats on all partitions causing longer run time. Testing team was not running those SQL in QA region. I decided to stop gathering histogram in UNIT database and after that the program is completed 2 min.

dbms_stats.gather_table_stats(ownname=>'OIDFATM',tabname=>’FCT_COMMON_POLICY_SUMMARY’, METHOD_OPT=>'for all columns size 1', cascade=>TRUE, degree => DBMS_STATS.DEFAULT_DEGREE);

METHOD_OPT=>'for all columns size 1' is omitting histograms creation. In our QA environment, so far histograms are not created.

dbms_stats.set_table_prefs('OIDFATM','FCT_COMMON_POLICY_SUMMARY','INCREMENTAL','TRUE');

dbms_stats.gather_table_stats(ownname=>'OIDFATM',tabname=>'FCT_COMMON_POLICY_SUMMARY',cascade=>TRUE,degree => DBMS_STATS.DEFAULT_DEGREE);

In unit environment the gather stats job is taking 35 mins and in QA env it is completing within 2 mins. Upon further analysis we found that in Unit the gather stats job is gathering stats for all 156 partitions both on table & index causing longer run time. In QA env the gather stats job is just gathering stats only for 4-5 partitioning due to which it is completing within 2 min.

I wanted to check why does optimizer think that the stats are stale for all partitions for UNIT database.

Further investigation revealed that Histogram creation on few columns are being generating as developement team has been running some select aggregasion query that was accessing multiple partitions in UNIT region. As histograms are gathered , hence gather stats program was gathering stats on all partitions causing longer run time. Testing team was not running those SQL in QA region. I decided to stop gathering histogram in UNIT database and after that the program is completed 2 min.

dbms_stats.gather_table_stats(ownname=>'OIDFATM',tabname=>’FCT_COMMON_POLICY_SUMMARY’, METHOD_OPT=>'for all columns size 1', cascade=>TRUE, degree => DBMS_STATS.DEFAULT_DEGREE);

METHOD_OPT=>'for all columns size 1' is omitting histograms creation. In our QA environment, so far histograms are not created.

SQL Plan baseline from AWR

One of the concurrent program in EBS Development region was reported as running slow and the issue was fixed after i created SQL plan baselines after taking up better execution plan for 2 sql_id in AWR history reports. Below are the steps

SQL_ID

PLAN_HASH RESPONSE_IN_SEC

----------- -----------

----------------

36qn50vfsgzjh 2709963026 .000257534 AWR history -- 36,750 exec

36qn50vfsgzjh 584524445

.509160245 cursor memory -- 23,544 exec

a07y05uya8653 1271677127 .000286352 AWR history -- 17,356

exec

a07y05uya8653 4293861213 .681914067 cursor memory - 14398 exec

create

sql tuning set ( RUN all below step as SYS user)

exec

dbms_sqltune.create_sqlset(sqlset_name =>

'a07y05uya8653_sqlset_dev2',description => 'dev2_ebstax_sql2');

Load the sql plan from AWR & use filter option for any specific plan hash

value

declare

baseline_ref_cur

DBMS_SQLTUNE.SQLSET_CURSOR;

begin

open

baseline_ref_cur for

select

VALUE(p) from table(

DBMS_SQLTUNE.SELECT_WORKLOAD_REPOSITORY(&begin_snap_id,

&end_snap_id,'sql_id='||CHR(39)||'&sql_id'||CHR(39)||'',NULL,NULL,NULL,NULL,NULL,NULL,'ALL'))

p;

DBMS_SQLTUNE.LOAD_SQLSET('a07y05uya8653_sqlset_dev2',

baseline_ref_cur);

end;

/

OR

declare

baseline_ref_cur

DBMS_SQLTUNE.SQLSET_CURSOR;

begin

open

baseline_ref_cur for

select

VALUE(p) from table(

DBMS_SQLTUNE.SELECT_WORKLOAD_REPOSITORY(&begin_snap_id,

&end_snap_id,'sql_id='||CHR(39)||'&sql_id'||CHR(39)||' and plan_hash_value=1271677127',NULL,NULL,NULL,NULL,NULL,NULL,'ALL'))

p;

DBMS_SQLTUNE.LOAD_SQLSET('a07y05uya8653_sqlset_dev2',

baseline_ref_cur);

end;

/

Verify

sql plan value

select

* from table(dbms_xplan.display_sqlset('a07y05uya8653_sqlset_dev2','&sql_id'));

Load

into baseline from sql tuning set

set

serveroutput on

declare

my_int

pls_integer;

begin

my_int

:= dbms_spm.load_plans_from_sqlset (

sqlset_name

=> 'a07y05uya8653_sqlset_dev2',

sqlset_owner

=> 'SYS',

fixed

=> 'NO',

enabled

=> 'YES');

DBMS_OUTPUT.PUT_line(my_int);

end;

/

SQL>

SELECT SQL_HANDLE,CREATOR, PARSING_SCHEMA_NAME, CREATED, ENABLED,

ACCEPTED, FIXED, module FROM DBA_SQL_PLAN_BASELINES order by

created;

SQL_HANDLE

CREATOR

PARSING_SCHEMA_NAME

CREATED

ENA ACC FIX MODULE

------------------------------

---------- ------------------------------ ------------------------------ ---

--- --- ----------------------------------------------------------------

SQL_8d714864a2f6cad0

SYS

APPS

14-AUG-17 05.13.28.000000 PM YES YES NO ARHGNRW

SQL_851abbb354b962d0

SYS

APPS

14-AUG-17 05.21.43.000000 PM YES YES NO ARHGNRW

SELECT

NAME,OWNER,CREATED,STATEMENT_COUNT FROM DBA_SQLSET where name in

('36qn50vfsgzjh_sqlset_dev2','a07y05uya8653_sqlset_dev2');

NAME

OWNER

CREATED

STATEMENT_COUNT

------------------------------

------------------------------ ------------------------------ ---------------

36qn50vfsgzjh_sqlset_dev2

SYS

14-AUG-17

1

a07y05uya8653_sqlset_dev2

SYS

14-AUG-17

1

select

* from

table(dbms_xplan.display_sql_plan_baseline(sql_handle=>'SQL_8d714864a2f6cad0',format=>'basic'));

select

* from

table(dbms_xplan.display_sql_plan_baseline(sql_handle=>'SQL_851abbb354b962d0',format=>'basic'));

Drop

sql tuning set if required

BEGIN

DBMS_SQLTUNE.DROP_SQLSET( sqlset_name => 'a07y05uya8653_sqlset_dev2' );

END;

/

ORA-00607 Error 607 encountered recovering transaction db_block_checking

It was fine morning but by afternoon, it was an ooopss !! usual DBA days :) .

In our production Oracle EBS R12.1.3 PROD system, we received data corruption issues that caused the bounce SMON to go down and after which SMON could not recover the corrupt data block and instance could not be brought up. DB alert log we found below error

ORACLE Instance EBSXX (pid = 14) - Error 607 encountered while recovering transaction (7, 5) on object 46989.

Errors in file /move/prod/db/tech_st/11.2.0/admin/EBSXX_ebsp-pkg/diag/rdbms/ebsp/EBSXX/trace/EBSXX_smon_16908566.trc:

ORA-00607: Internal error occurred while making a change to a data block

ORA-00600: internal error code, arguments: [6856], [0], [65], [], [], [], [], [], [], [], [], []

PMON (ospid: 21430580): terminating the instance due to error 474

Thu Oct 12 07:53:55 2017

opiodr aborting process unknown ospid (23396816) as a result of ORA-1092

Thu Oct 12 07:53:55 2017

ORA-1092 : opitsk aborting process

Thu Oct 12 07:53:55 2017

System state dump requested by (instance=1, osid=21430580 (PMON)), summary=[abnormal instance termination].

System State dumped to trace file /move/XXX/trace/EBSXX_diag_30999036_20171012075355.trc

Instance terminated by PMON, pid = 21430580

The root cause was identified as DB parameter db_block_checking value was not set. You can follow below steps to recover that database. Connect as SYSDBA and using existing spfile start the database in nomount option.

SQL> Startup nomount ;

SQL> Create pfile='/tmp/corrupt.ora' from spfile ;

SQL> Shutdown immediate;

Modify the corrupt.ora and add below line at the end of the file to recover SMON with trace details

event = '10513 trace name context forever, level 2'

SQL> Startup pfile='/tmp/corrupt.ora'

Oracle support also suggested another option to set below parameter dynamically to start DB

set "_smu_debug_mode"=1024 to stop Transaction recovery (it can be done dynamically):

alter system set "_smu_debug_mode"=1024;

The above step will allow the database to startup by making SMON to skip the recovery of corrupt data block. Below SQL will help to find more detail of block affected by data corruption issue.

SQL> select useg.segment_name, useg.segment_id, useg.tablespace_name, useg.status from dba_rollback_segs useg where useg.segment_id = '7';

SQL> select XIDUSN, XIDSLOT, XIDSQN, USED_UBLK, USED_UREC from gv$transaction where XIDUSN = 7;

Edit your init@SID.ora and add below

_CORRUPTED_ROLLBACK_SEGMENTS= _SYSSMU7_1302289624$

In our case , we were lucky that we just truncated the table and ran EBS concurrent program that successfully repopulated the table. But in most cases, you have to recover the table

The issue can be prevented by setting below parameter in database

Alter system set db_block_checking = MEDIUM scope=both;

NOTE : Setting the value for db_block_checking to TRUE or FULL might add overhead of 1% to 10% in overall performance issue.

In our production Oracle EBS R12.1.3 PROD system, we received data corruption issues that caused the bounce SMON to go down and after which SMON could not recover the corrupt data block and instance could not be brought up. DB alert log we found below error

ORACLE Instance EBSXX (pid = 14) - Error 607 encountered while recovering transaction (7, 5) on object 46989.

Errors in file /move/prod/db/tech_st/11.2.0/admin/EBSXX_ebsp-pkg/diag/rdbms/ebsp/EBSXX/trace/EBSXX_smon_16908566.trc:

ORA-00607: Internal error occurred while making a change to a data block

ORA-00600: internal error code, arguments: [6856], [0], [65], [], [], [], [], [], [], [], [], []

PMON (ospid: 21430580): terminating the instance due to error 474

Thu Oct 12 07:53:55 2017

opiodr aborting process unknown ospid (23396816) as a result of ORA-1092

Thu Oct 12 07:53:55 2017

ORA-1092 : opitsk aborting process

Thu Oct 12 07:53:55 2017

System state dump requested by (instance=1, osid=21430580 (PMON)), summary=[abnormal instance termination].

System State dumped to trace file /move/XXX/trace/EBSXX_diag_30999036_20171012075355.trc

Instance terminated by PMON, pid = 21430580

The root cause was identified as DB parameter db_block_checking value was not set. You can follow below steps to recover that database. Connect as SYSDBA and using existing spfile start the database in nomount option.

SQL> Startup nomount ;

SQL> Create pfile='/tmp/corrupt.ora' from spfile ;

SQL> Shutdown immediate;

Modify the corrupt.ora and add below line at the end of the file to recover SMON with trace details

event = '10513 trace name context forever, level 2'

SQL> Startup pfile='/tmp/corrupt.ora'

Oracle support also suggested another option to set below parameter dynamically to start DB

set "_smu_debug_mode"=1024 to stop Transaction recovery (it can be done dynamically):

alter system set "_smu_debug_mode"=1024;

The above step will allow the database to startup by making SMON to skip the recovery of corrupt data block. Below SQL will help to find more detail of block affected by data corruption issue.

SQL> select useg.segment_name, useg.segment_id, useg.tablespace_name, useg.status from dba_rollback_segs useg where useg.segment_id = '7';

SQL> select XIDUSN, XIDSLOT, XIDSQN, USED_UBLK, USED_UREC from gv$transaction where XIDUSN = 7;

Edit your init@SID.ora and add below

_CORRUPTED_ROLLBACK_SEGMENTS= _SYSSMU7_1302289624$

In our case , we were lucky that we just truncated the table and ran EBS concurrent program that successfully repopulated the table. But in most cases, you have to recover the table

The issue can be prevented by setting below parameter in database

Alter system set db_block_checking = MEDIUM scope=both;

NOTE : Setting the value for db_block_checking to TRUE or FULL might add overhead of 1% to 10% in overall performance issue.

Friday, October 6, 2017

Migrate oracle database statistics

Steps to Export Import table database statistics for specific table using dbms_stat

1. Create the stats table in source instance where you have to export. Run as SYSTEM user

exec dbms_stats.create_stat_table('XX','STATS_EBSP');

2. Export the stats for table (AP_INV_TBL) in the stats table in source instance:

exec dbms_stats.export_table_stats('XX','AP_INV_TBL',NULL,'STATS_EBSP',NULL,TRUE,'AP');

3. Export the stats table in source instance using export utility:

exp system/*** tables='XX.STATS_EBSP' file=stat_ebsp.dmp log=expstat_ebsp.log

4. Import the stats table into the target instance using import utility

imp system/*** file=stat_ebsp.dmp fromuser=XX touser=XX ignore=y

5. Import the stats for the table into target instance:

exec dbms_stats.import_table_stats('XX', 'AP_INV_TBL',NULL,'STATS_EBSP',NULL,TRUE,'AP');

1. Create the stats table in source instance where you have to export. Run as SYSTEM user

exec dbms_stats.create_stat_table('XX','STATS_EBSP');

2. Export the stats for table (AP_INV_TBL) in the stats table in source instance:

exec dbms_stats.export_table_stats('XX','AP_INV_TBL',NULL,'STATS_EBSP',NULL,TRUE,'AP');

3. Export the stats table in source instance using export utility:

exp system/*** tables='XX.STATS_EBSP' file=stat_ebsp.dmp log=expstat_ebsp.log

4. Import the stats table into the target instance using import utility

imp system/*** file=stat_ebsp.dmp fromuser=XX touser=XX ignore=y

5. Import the stats for the table into target instance:

exec dbms_stats.import_table_stats('XX', 'AP_INV_TBL',NULL,'STATS_EBSP',NULL,TRUE,'AP');

Wednesday, October 4, 2017

Oracle database guaranteed restore point

As DBAs, we often come across a requirement when business want all the changes on a database to be restored, so they can retest everything from scratch. Database clone or RMAN restore is an answer to that. But both options are time consuming for small amount of change in database has to be reverted.

So to deal with this kind of situation, you can use Normal Restore point or Guaranteed restore point offered by Oracle databases.

1. standard Restore Point : It assigns a restore point name to an SCN or specific point in time.The control file stores the name of the restore point and the SCN.

2. Guaranteed Restore Point : It is same as normal restore point but only difference is that the guaranteed restore points never age out of the control file and must be explicitly dropped.

1. standard Restore Point : It assigns a restore point name to an SCN or specific point in time.The control file stores the name of the restore point and the SCN.

2. Guaranteed Restore Point : It is same as normal restore point but only difference is that the guaranteed restore points never age out of the control file and must be explicitly dropped.

Ensure

to shutdown entire MT & CM tier in EBS before doing this in DB tier. The database should have archive log enabled.

Check recovery parameters

show parameter recovery; (recovery_file_dest & recovery_file_dest_size should be set correctly)

Convert DB to archive log mode

Convert DB to archive log mode

select

name,database_role,open_mode,flashback_on,log_mode from v$database;

shutdown

immediate

startup

mount

alter

database archivelog à

DEV was in NOARCHIVELOG mode

alter

database open

archive

log list à Verify if archive

log is enabled

Create Guaranteed restore point (If flashback is not enabled then it enables it on its own)

SELECT

NAME, SCN, TIME, DATABASE_INCARNATION#, GUARANTEE_FLASHBACK_DATABASE,

STORAGE_SIZE FROM V$RESTORE_POINT WHERE GUARANTEE_FLASHBACK_DATABASE='YES';

CREATE RESTORE POINT TEST_BEFORE GUARANTEE FLASHBACK DATABASE; à

creates restore point

Flashback database to restore point

shutdown

immediate;

startup

mount;

FLASHBACK DATABASE TO RESTORE POINT TEST_BEFORE;

alter

database open resetlogs; ( This may take few mins based on amount of changes in database)

select

log_mode,flashback_on from v$database;

Verify flashback logs

select * from V$FLASHBACK_DATABASE_LOG;

select * from V$FLASHBACK_DATABASE_STAT;

select * from V$FLASHBACK_DATABASE_LOGFILE;

Monday, September 25, 2017

Migrate SQL Plan Baselines

You can now manually load SQL baseline of a specific sql_id from a different better performing database (e.g. SIT) to another database where you have slowness issue for that specific sql or sql_id.

Oracle notes and most other blogs

did not have exact correct steps to execute that. You can refer this step by step guide.

Migrate or Export / Import SQL Plan Baseline across Database:

In my DEV, environment I had already created SQL plan baselines for this specific sql_id to stabilize the plan. In my SIT, the execution plan for the same sql_id was not good. I could not find better execution plans for this specific sql_id either in cursor memory or historical reports in SIT. Time was running out , hence i decided to get better baseline from DEV and import in SIT to influence optimizer to perform better.

- Create staging table in

DEV as we want to export the SQL baseline from here and execute the package as SYS or SYSDBA user

exec DBMS_SPM.CREATE_STGTAB_BASELINE(table_name

=> 'SQLBASE_SIT', table_owner=>'APPS');

- Pack the SQL plan

baselines for the sql_id that you want to export from the SQL

management base into the staging table. Execute as SYS user

DECLARE

my_plans number;

BEGIN

my_plans :=

DBMS_SPM.PACK_STGTAB_BASELINE(

table_name => 'SQLBASE_SIT',

table_owner=>'APPS',

plan_name

=> 'SQL_PLAN_8a6pvqdabksqh4673ac44',

sql_handle => 'SQL_851abbb354b962d0');

END;

/

- Export the table in DEV2

and import it in SIT1

exp system/***

tables='APPS.SQLBASE_SIT' file=sit_sqlbaseline01.dmp

log=expsit_sqlbaseline01.log à

DEV

imp system/****

file=sit_sqlbaseline01.dmp log=impsqlbase01.log full=y à SIT

- Unpacks the specific sql_handle and plan_name stored in the staging table 'SQLBASE_SIT'. Execute only as SYS user. Importing as SYSTEM user would not work and that's the key.

DECLARE

my_plans number;

BEGIN

my_plans :=

DBMS_SPM.UNPACK_STGTAB_BASELINE(

table_name => 'SQLBASE_SIT',

table_owner=>'APPS',

fixed => 'NO',

fixed => 'NO',

plan_name =>

'SQL_PLAN_8a6pvqdabksqh4673ac44',

sql_handle => 'SQL_851abbb354b962d0');

END;

/

- Verify if the sql_id (v$sql ) have

picked up sql plan baseline in SIT1 and it has picked up

order by last_active_time desc;

------- ---------- ------------- --------------- ----------

---------- ---------- ------------------------------

1 APPS

36qn50vfsgzjh

2709963026

38681 25

.000377 SQL_PLAN_8uwa8ckjgdkqh5557b5eb

Sometimes, you may find

it odd that optimizer has not picked up the baseline even after importing. In

those cases, you can flush the address of sql from shared pool , so that the

baselines is picked up.

- Find the SQL

address & hash_value ( not plan_hash_value)

select address, hash_value, plan_hash_value, sql_id,executions,

fetches,(SUM(elapsed_time)/power(10,6))/SUM(executions) avg_response_in_seconds

from gv$sqlarea where sql_id in ('36qn50vfsgzjh','a07y05uya8653') GROUP BY

address, hash_value,plan_hash_value,sql_id,executions, fetches;

ADDRESS HASH_VALUE

PLAN_HASH_VALUE SQL_ID EXECUTIONS

FETCHES AVG_RESPONSE_IN_SECONDS

---------------- ---------- --------------- -------------

---------- ---------- -----------------------

0700010265D3CD58 3716677168 584524445

36qn50vfsgzjh 6056 11800

.876101928

- Flush the specific SQL

from the pool

exec DBMS_SHARED_POOL.PURGE ('0700010265D3CD58,3716677168', 'C');

DROP BASELINE

If there is ever a need to drop the SQL baseline, you can use below

If there is ever a need to drop the SQL baseline, you can use below

DECLARE

my_plans number;

BEGIN

my_plans :=DBMS_SPM.DROP_SQL_PLAN_BASELINE (

plan_name => 'SQL_PLAN_8a6pvqdabksqh4673ac44',

sql_handle => 'SQL_851abbb354b962d0');

END;

/

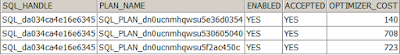

EVOLVE BASELINE

By default the SQL baselines are evolved unless they marked as FIXED. Hence one specific SQL_Handle can have multiple plan_name as they are evolved. We can run below procedure as SYS or SYSTEM manually and if there are any better plan then baseline plan that has been evolved then it will be accepted.

SQL> SET LONG 100000;

SQL> SELECT

DBMS_SPM.evolve_sql_plan_baseline(sql_handle => 'SQL_da034ca4e16e6345') from

dual;

DBMS_SPM.EVOLVE_SQL_PLAN_BASELINE(SQL_HANDLE=>'SQL_DA034CA4E16E6345')

--------------------------------------------------------------------------------

Plan was

verified: Time used 7.506 seconds.

Plan failed

performance criterion: 1.25 times better than baseline plan.

Baseline Plan Test Plan Stats Ratio

------------- --------- -----------

Execution Status:

COMPLETE

COMPLETE

Rows Processed:

3

3

Elapsed Time(ms):

3.316

4.272 .78

CPU Time(ms):

2.043

1.891

1.08

Buffer Gets:

424 339

1.25

Physical Read

Requests: 0

0

DBMS_SPM.EVOLVE_SQL_PLAN_BASELINE(SQL_HANDLE=>'SQL_DA034CA4E16E6345')

--------------------------------------------------------------------------------

Physical Write

Requests: 0

0

Physical Read

Bytes: 0

0

Physical Write

Bytes: 0

0

Executions:

1 1

Plan:

SQL_PLAN_dn0ucnmhqwsu5e36d0354

------------------------------------

Plan was

verified: Time used 8.31 seconds.

Plan passed

performance criterion: 1.58 times better than baseline plan.

Plan was changed

to an accepted plan.

- Below SQL show that same SQL_handle has 3 Baslines accepted and baseline with best optimizer cost would be picked up.

Saturday, September 16, 2017

Cancel concurrent request "could not lock request" message

As part of our day to day Apps DBA job, we get the ask from other product support teams to cancel concurrent requests based on business requirement. However, at times we get into a situation where even we are unable to cancel request or it simply just hangs. For Example:-

- When

you cancel or terminate concurrent request from SYSADMIN it

throws "could not lock request"

- Even

running the below sql in backend to terminate the request also hangs doing

nothing

update apps.fnd_concurrent_requests set phase_code='C' , status_code='X' where request_id=12345;

- When the above update state was running in session 1 , you can open duplicate session 2 and run the below statement to find out which SID is causing the lock to session 1 ( "update" statement). Then clear the blocking session

SELECT inst_id,

DECODE(request,0,'Holder: ','Waiter: ')||sid sess, id1, id2, lmode, request,

type FROM GV$LOCK

WHERE (id1, id2, type)

IN (SELECT id1, id2, type FROM GV$LOCK WHERE request>0) ORDER BY

id1, request;

- Some times, you may not find any database blocking session, the option would be to find any FNLIBR process that is still accessing concurrent request id. In AIX system, it's easy, run fuser command

fuser -u $APPLCSF/$APPLLOG/l12345.req 10682440(oracleebs)

- LINUX system, you can use below query

Set Pages 1000

Set head on

Column Manager Format A12

Column Request Format 999999999

Column Program Format A30

Column User_Name Format A15

Column Started Format A15

Column FNDLIBR Format A9

prompt Managers that is running a request and FNDLIBR PROCESS;

select substr(Concurrent_Queue_Name,

Fpro.OS_PROCESS_ID "FNDLIBR",

substr(Concurrent_Program_

from apps.Fnd_Concurrent_Queues Fcq, apps.Fnd_Concurrent_Requests Fcr,

apps.Fnd_Concurrent_Programs Fcp, apps.Fnd_User Fu, apps.Fnd_Concurrent_Processes Fpro

where

Phase_Code = 'R' And Status_Code <> 'W' And

Fcr.Controlling_Manager = Concurrent_Process_Id and

(Fcq.Concurrent_Queue_Id = Fpro.Concurrent_Queue_Id and

Fcq.Application_Id = Fpro.Queue_Application_Id ) and

(Fcr.Concurrent_Program_Id = Fcp.Concurrent_Program_Id and

Fcr.Program_Application_Id = Fcp.Application_Id ) and

Fcr.Requested_By = User_Id and Fcr.request_id=&request_id

order by Started;MANAGER REQUEST USER_NAME FNDLIBR PROGRAM S STARTED

------------ ---------- --------------- --------- --------- ------------------------------ - ---------------

STANDARD 12345 XXYYY 9240602 XLAACCPB

Now check the process in CM tier

ps -ef|grep 9240602|grep -v grep

oraebs 9240602 8060984 0 Aug 25 - 0:49 FNDLIBR

Once you have FNDLIBR process details, you can find the corresponding database session with below SQL and in our case the session has been waiting for a very long time 23409 secs

select ses.sid,ses.serial#, proc.spid,ses.sql_id,ses.

SID SERIAL# SPID SQL_ID PROCESS LAST_CALL_ET EVENT

------ ------- ------ ------------- ------------------------ ------------ ---------------

804 14487 51118106 fnpyvpk41nd5s 9240602 243409 Streams AQ: waiting for messages in the queue

After clearing the DB session , you can kill the concurrent request from backend

alter system kill session '804,14487,@1'

update apps.fnd_concurrent_requests set phase_code='C' , status_code='X' where request_id=12345;

commit;

Subscribe to:

Comments (Atom)

Oracle database performance notes

Master Note: Database Performance Overview (Doc ID 402983.1) SQL Tuning Health-Check Script (SQLHC) (Doc ID 1366133.1) Achieving Optimal Per...

-

It was fine morning but by afternoon, it was an ooopss !! usual DBA days :) . In our production Oracle EBS R12.1.3 PROD system, we rece...

-

As part of our day to day Apps DBA job, we get the ask from other product support teams to cancel concurrent requests based on business ...